Last updated on May 21, 2017

It’s rare that I can combine my two primary interests, applied econometrics and food policy, in a single post, so I am particularly happy to be able to do just that here.

In 2014, Nathan Nunn and Nancy Qian published an article in the American Economic Review where they purportedly showed that US food aid deliveries caused conflict in recipient countries. Here is the abstract of their article:

We study the effect of US food aid on conflict in recipient countries. Our analysis exploits time variation in food aid shipments due to changes in U.S. wheat production and cross-sectional variation in a country’s tendency to receive any U.S. food aid. According to our estimates, an increase in U.S. food aid increases the incidence and duration of civil conflicts, but has no robust effect on inter-state conflicts or the onset of civil conflicts. We also provide suggestive evidence that the effects are most pronounced in countries with a recent history of civil conflict.

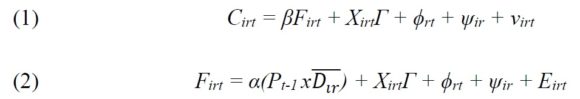

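Specifically, Nunn and Qian estimate the following two-stage least squares specification:

$$C_{irt} = \beta F_{irt} + X_{irt}\Gamma + \varphi_{rt} + \psi_{ir} + \nu_{irt},$$

$$F_{irt} = \alpha \left(P_{t-1} \times D_{ir}\right) + X_{irt}\Gamma + \varphi_{rt} + \psi_{ir} + \varepsilon_{irt},$$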

where $C_{irt}$ is conflict in country $i$ in region $r$ in year $t$; $F_{irt}$ is US food aid deliveries; $X_{irt}$ is a vector of controls; $P_{t-1}$ is US wheat production in the previous year; $D_{ir}$ is a country’s propensity to receive US food aid (i.e., the proportion of all years in the sample in which that country received US food aid); $\varphi_{rt}$ and $\psi_{ir}$ are region-year and country fixed effects, respectively; and $\nu_{irt}$ and $\varepsilon_{irt}$ are mean-zero error terms.

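Note the clever statistical trick Nunn and Qian use: they interact two variables, one of which is plausibly exogenous, to identify the effect of $F$ on $C$. Specifically, lagged US wheat production $P_{t-1}$, which varies only over time, is interacted with a country’s propensity to receive US food aid $D_{ir}$, which varies only across countries. The argument is that the former is plausibly exogenous to conflict, so interacting it with the latter can create a valid instrument for US food aid receipts. Because each component on its own is absorbed by the fixed effects ($P_{t-1}$ by the region-year effects, $D_{ir}$ by the country effects), only the interaction serves as the excluded instrument: a large US wheat harvest is assumed to raise aid shipments more in countries that regularly receive aid than in countries that rarely do. Using this instrument, Nunn and Qian find that US food aid deliveries seem to cause conflict in recipient countries.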
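To make the mechanics concrete, here is a minimal Python sketch of this kind of interaction instrument on simulated data, not Nunn and Qian’s data or code. It builds the instrument as the outer product of a cross-sectional propensity `D` and a time series `P_lag`, partials out fixed effects by two-way demeaning a balanced panel, and computes the just-identified 2SLS coefficient as a ratio of sums. The data-generating process, the parameter values, and the function names (`interaction_iv`, `fe_ols`) are hypothetical, and, as a simplification, country and year effects stand in for Nunn and Qian’s country and region-year effects.

```python
import numpy as np

def two_way_demean(x):
    """Strip country (row) and year (column) means from a balanced N x T panel."""
    return x - x.mean(axis=1, keepdims=True) - x.mean(axis=0, keepdims=True) + x.mean()

def interaction_iv(C, F, P_lag, D):
    """Just-identified 2SLS of C on F with instrument Z = D x P_lag,
    after partialling out country and year fixed effects."""
    Z = np.outer(D, P_lag)                    # N x T: D varies by country, P_lag by year
    Zt, Ct, Ft = (two_way_demean(a) for a in (Z, C, F))
    return (Zt * Ct).sum() / (Zt * Ft).sum()

def fe_ols(C, F):
    """Fixed-effects OLS of C on F, for comparison."""
    Ct, Ft = two_way_demean(C), two_way_demean(F)
    return (Ft * Ct).sum() / (Ft * Ft).sum()

# Hypothetical data-generating process in which the exclusion restriction holds.
rng = np.random.default_rng(0)
N, T, beta = 200, 36, 0.5                     # countries, years, true effect
D = rng.uniform(0.0, 1.0, N)                  # propensity to receive aid
P_lag = rng.normal(0.0, 1.0, T)               # lagged wheat production
country = (2.0 * D)[:, None]                  # country effects correlated with D
year = rng.normal(0.0, 1.0, T)[None, :]       # common year shocks
shock = rng.normal(0.0, 1.0, (N, T))          # unobservable: raises aid, lowers conflict
F = 2.0 * np.outer(D, P_lag) + country + year + shock + rng.normal(0.0, 1.0, (N, T))
C = beta * F + country + year - shock + rng.normal(0.0, 1.0, (N, T))

iv_hat = interaction_iv(C, F, P_lag, D)
ols_hat = fe_ols(C, F)
print(f"true beta: {beta}, FE-OLS: {ols_hat:.2f}, interaction IV: {iv_hat:.2f}")
```

Because the simulated unobservable raises aid and lowers conflict, fixed-effects OLS is biased downward here, whereas the interaction instrument, which satisfies the exclusion restriction by construction in this simulation, should land near the true value of 0.5.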

But in a new working paper titled “Revisiting the Effect of Food Aid on Conflict: A Methodological Caution,” Paul Christian and Chris Barrett show that the identification strategy pursued by Nunn and Qian leads to spurious findings in their specific context, i.e., that US food aid does not cause conflict.

More generally, Christian and Barrett make the methodological point that this type of identification strategy “is susceptible to violations of the exclusion restriction arising from heterogeneous nonlinear trends analogous to violations of the parallel trends assumption in standard difference-in-differences estimation.”
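The exclusion-restriction problem can be made concrete with a short derivation. This is a sketch under simplifying assumptions (a balanced panel, no controls, country and year rather than country and region-year fixed effects, and hence $D_i$ in place of $D_{ir}$), not Christian and Barrett’s own derivation. With the single instrument $Z_{it} = P_{t-1} D_i$ and tildes denoting residuals after removing country and year means,

$$\hat\beta_{2SLS} = \frac{\sum_{i,t} \tilde Z_{it}\,\tilde C_{it}}{\sum_{i,t} \tilde Z_{it}\,\tilde F_{it}} = \beta + \frac{\sum_{i,t} \tilde Z_{it}\,\tilde \nu_{it}}{\sum_{i,t} \tilde Z_{it}\,\tilde F_{it}}.$$

Now suppose the error contains a heterogeneous trend, $\nu_{it} = \gamma D_i\, g(t) + u_{it}$, with $g$ nonlinear and $u_{it}$ unrelated to the instrument. Two-way demeaning turns a product of a cross-sectional term and a time-series term into the product of their deviations, so $\tilde Z_{it} = (D_i - \bar D)(P_{t-1} - \bar P)$, and the trend term survives both sets of fixed effects as $\gamma (D_i - \bar D)(g(t) - \bar g)$. Its contribution to the estimator is

$$\hat\beta_{2SLS} - \beta \approx \frac{\gamma \sum_i (D_i - \bar D)^2 \sum_t (P_{t-1} - \bar P)\big(g(t) - \bar g\big)}{\sum_{i,t} \tilde Z_{it}\,\tilde F_{it}}.$$

With a positive first stage, this bias is positive whenever wheat production happens to co-trend with the extra conflict path of high-propensity countries ($\gamma > 0$ and $P_{t-1}$ moving with $g(t)$), and it can exceed a negative $\beta$ in magnitude. High- and low-$D$ countries are then on different conflict paths for reasons unrelated to aid, which is the continuous analogue of a parallel-trends violation.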

Here is their abstract; the emphasis is mine:

An increasingly popular panel data estimation strategy uses an instrumental variable (IV) constructed by interacting an exogenous time series or spatial variable with a potentially endogenous cross-sectional exposure variable to generate a continuous difference-in-differences (DID) estimator that identifies causal effects from inter-temporal variation in the IV differentially scaled by cross-sectional exposure. This strategy is susceptible to violations of the exclusion restriction arising from heterogeneous nonlinear trends analogous to violations of the parallel trends assumption in standard DID estimation. Using this strategy, Nunn and Qian (2014) claim to identify a causal effect of food aid shipments on conflict in recipient countries. We show that under the influence of spurious temporal and endogenous cross-sectional components of food aid allocations, the 2SLS strategy virtually always identifies a positive effect of aid on conflict, even if the within year variation that underlies the strategy is randomly assigned across countries. Monte Carlo analysis shows that this upward bias can persist even if food aid reduces the incidence of conflict. These findings serve to correct an erroneous policy implication; more generally, and provide a cautionary tale for authors using similar identification strategies based on DID-type IV estimators in panel data.
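To see how a heterogeneous nonlinear trend can flip the sign of the estimate, here is a minimal Monte Carlo sketch in Python. It is not Christian and Barrett’s simulation: the data-generating process, the convex trend $g(t) = (t/T)^2$, the helper name `simulate_iv`, and all parameter values (a true effect of $-0.2$, 200 countries, 36 years, trend strength `gamma`) are hypothetical, and country and year fixed effects stand in for country and region-year effects. By construction, food aid reduces conflict; the only difference between the two runs is whether high-propensity countries also follow a conflict trend that co-moves with wheat production.

```python
import numpy as np

def two_way_demean(x):
    """Strip country (row) and year (column) means from a balanced N x T panel."""
    return x - x.mean(axis=1, keepdims=True) - x.mean(axis=0, keepdims=True) + x.mean()

def simulate_iv(gamma, beta=-0.2, N=200, T=36, reps=200, seed=1):
    """Return interaction-IV estimates of beta across Monte Carlo replications.

    gamma scales a conflict trend D_i * g(t) that is left in the error term:
    gamma = 0 means the exclusion restriction holds; gamma > 0 violates it.
    """
    rng = np.random.default_rng(seed)
    g = (np.arange(1, T + 1) / T) ** 2               # common nonlinear (convex) trend
    estimates = np.empty(reps)
    for r in range(reps):
        D = rng.uniform(0.0, 1.0, N)                 # aid propensity
        P_lag = 3.0 * g + rng.normal(0.0, 1.0, T)    # wheat output co-trends with g
        Z = np.outer(D, P_lag)                       # interaction instrument
        country = rng.normal(0.0, 1.0, N)[:, None]
        year = rng.normal(0.0, 1.0, T)[None, :]
        F = Z + country + year + rng.normal(0.0, 1.0, (N, T))
        C = (beta * F + country + year
             + gamma * np.outer(D, g)                # heterogeneous nonlinear trend
             + rng.normal(0.0, 1.0, (N, T)))
        Zt, Ct, Ft = (two_way_demean(a) for a in (Z, C, F))
        estimates[r] = (Zt * Ct).sum() / (Zt * Ft).sum()
    return estimates

valid = simulate_iv(gamma=0.0)
violated = simulate_iv(gamma=4.0)
print(f"mean estimate, valid exclusion:    {valid.mean():.2f}")
print(f"mean estimate, heterogeneous trend: {violated.mean():.2f}")
print(f"share of positive estimates under the violation: {(violated > 0).mean():.2f}")
```

With `gamma=0.0` the estimates should center on the true value of $-0.2$; with `gamma=4.0` they should be positive in nearly every replication, even though aid reduces conflict in the simulated data.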

The abstract does not do justice to Christian and Barrett’s full argument, and I cannot possibly do so in a 700-word post. Interested readers should read the full paper, which is very short (17 pages at 1.5 spacing; the PDF makes it seem much longer because of the appendices).