Most econometrics students learn about type I and type II errors. A type I error consists in incorrectly rejecting a null hypothesis when it is true; a type II error consists in incorrectly failing to reject a null hypothesis when it is false.

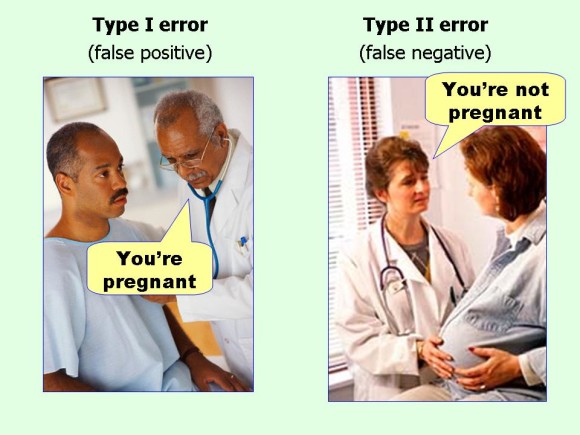

Or, if you are one of those people who, like me, is on the slow end of things and always has to look up their definitions, here is a nifty cartoon to learn the difference between type I and type II errors:

What is this nonsense about type III errors, then? In his Guide to Econometrics, Kennedy wrote:

A type III error occurs when a researcher produces the right answer to the wrong question. A corollary of this rule is that an approximate answer to the right question is worth a great deal more than a precise answer to the wrong question.

Here is a useful set of slides on type III (wrong question, right answer) and type IV (right question, wrong answer) by Charlotte Ursula Tate, from San Francisco State University.

How common are type III errors? And what does a type III error look like in practice? There is no hard evidence on the extent of type III errors beyond the anecdotal, but how often do we run into applied researchers who are bent on applying a specific technique to a given problem? (To be fair, this is common in the applied econometrics literature, wherein researchers often develop a new estimator and apply it to suitable data for the purposes of illustrating said estimator; I have been guilty of this in the past myself.)

Worse, how about those cases where a (typically young, pre-tenure) researcher insists on toeing the “randomize or bust” party line, a situation which I have discussed here?

Relatedly, I remember sitting in a seminar wherein a colleague asked “How did you come to work on this topic?” to the presenter, who had just shown us findings behind which the causal story appeared tenuous to my colleague. When the presenter said “Well, I noticed that I had this source of exogenous variation in my data, so I decided to look for an outcome to apply it to…,” he lost about half of the audience. I guess the lesson for younger researchers here is to make sure you have a darn good story for why your empirical setup is interesting, and why it matters for policy, business, or other.

How do you protect yourself from making type III errors? As with many other things in the craft of applied econometrics, the answer is not contained in a theorem or lemma. Rather, the answer is to talk to colleagues about what you are doing, and to see whether what you are doing passes the laugh test.** Though you should often expect a certain amount of skepticism when you first explain what you are doing (after all, most of the obvious research questions have already been answered), you should be able to dissipate said skepticism pretty quickly with facts. So in a typical regression of Y on D, that means explaining how much the effect of D on Y costs to society, how many people it affects, how much it shortens life expectancy, etc.

* I’m ignoring type IV errors in this post since almost every other ‘Metrics Monday post has been dedicated to “right question, wrong answer”-type topics, such as endogeneity issues, which cause bias, which is a way to provide a wrong answer to a question, whether that question is right or wrong.

** Unless you live in Minnesota. Then the laugh test is the different test. If a Minnesotan says “That’s different!” when you explain your research question to him or her, you’re in trouble.