Last updated on April 1, 2018

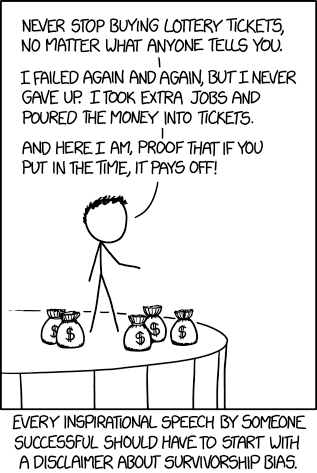

Wikipedia defines survivorship bias as

“the logical error of concentrating on the people or things that made it past some selection process and overlooking those that did not, typically because of their lack of visibility. This can lead to false conclusions in several different ways. It is a form of selection bias.”

The concept of survivorship bias was new to me until a doctoral student brought it to my attention. We are working together on yet another paper on contract farming, the economic institution wherein a processing firm delegates the production of an agricultural commodity to grower households, and the topic came up when we discussed Ton et al.’s (2018) excellent meta-analysis of the impacts of contract farming. In it, the authors report that published estimates of the impacts of participation in contract farming on household welfare are “… upward biased because contract schemes that fail in the initial years are not covered by research.”

At first, I thought this was a pretty important empirical issue. But after thinking about it for a few days, I came to see survivorship bias as important for some applications but not for others.

Who Does the Treatment Target?

Let’s take a fictitious (and highly contrived) example. Suppose you develop a vaccine that has the potential to stop the process of aging. Save for a handful of anti-vaxxers, this is presumably something that most individuals would be interested in getting. Suppose you run a clinical trial of the vaccine. You have a sample of 100 individuals; you allocate 50 individuals to the vaccine treatment, with the remaining 50 in the control group, and you wait two years to see what happens.

After two years, your research assistant reports: “The vaccine works in 90 percent of cases.” Upon closer inspection, you realize that this is true, but only for the 50 percent of the original treatment group who are still in the sample, the other half having dropped out.

Upon even closer inspection, you realize that the people who dropped out of your sample did so because they died, and that no one dropped out of the control group. This constitutes pretty good evidence that the vaccine kills some people.

What this means is that the vaccine you developed is only 45 percent effective, i.e., it works for 90 percent of those individuals it does not kill, and it kills half of those who receive it (0.9 × 0.5 = 0.45). So the average treatment effect of 90 percent reported by your research assistant is upward biased because of survivorship bias.
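To make the arithmetic concrete, here is a minimal sketch using only the numbers from the example. The variable names are mine, and the fractional count of successes (22.5 people) is simply a byproduct of how contrived the example is.

```python
# Numbers from the vaccine example above; variable names are illustrative.
n_treated = 50                   # individuals originally assigned to the vaccine
share_surviving = 0.50           # half of the treatment group is still in the sample
success_among_survivors = 0.90   # the rate reported by the research assistant

n_survivors = n_treated * share_surviving
n_successes = success_among_survivors * n_survivors

# Naive estimate: conditions on survival, so the deaths never enter the denominator.
naive_rate = n_successes / n_survivors

# Estimate over everyone originally treated: those who died count as failures.
full_sample_rate = n_successes / n_treated

print(f"Survivors only: {naive_rate:.0%}")
print(f"All treated:    {full_sample_rate:.0%}")
```

The only difference between the two rates is the denominator: dividing by the survivors gives the research assistant's figure, whereas dividing by everyone who was originally treated gives the 45 percent figure.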

Let’s take another example. Suppose you are interested in the effects of participation in contract farming on the income of grower households. Assuming you have a research design that lets you estimate a causal impact, imagine that you find that participation in contract farming raises household income by 30 percent.

At a conference where you present your findings, your discussant says: “That’s great, but your estimate suffers from survivorship bias; you only observe those for whom the institution has worked, which presumably means that you’re not observing all those who dropped out because it wasn’t raising their income…”

Your discussant is not wrong. But “not wrong” does not necessarily mean “relevant.” Specifically, for survivorship bias to actually matter, it has to be the case that the treatment being discussed is intended for everyone or that it will be administered to randomly selected individuals.

In my examples above, the vaccine against aging is something that would be intended for everyone, but contract farming is not for everyone. Processing firms get to decide which households they want to contract with, and they do so in nonrandom ways. Likewise, not all households are interested in participating in contract farming. In the former case, the treatment is intended for the entire population; in the latter case, the “treatment” is only intended for those who (think they) can make it and for those whom the processing firms select.

In both cases, survivorship bias threatens the external validity of your results, and it means you should be cautious about whatever policy recommendations you make on the basis of your results. This is especially so in cases where an intervention is likely to only “work” for a subset of a population: contract farming, to be sure, but also technology adoption, microfinance loans, index insurance, and so on.